Guardrails & Gigawatts: Building the AI–Energy Flywheel at the Grid Edge, an Interview with Sam Altman

Altman’s Core Signals

The first signal is pragmatic and present: virtual co‑workers are here. We used some of the last wave of technology to deploy close to 300 virtual employees. In the last year we have given 234 of our IT engineers AI pair programmers that help them develop software faster and more effectively. The teams that have these tools are still coming up to speed, but the trajectory is clear. This means that the near‑term enterprise step‑change comes from agents and AI software engineers that execute tasks end‑to‑end. The teams that learn to reorganize their processes around agents, to feed them clean data, and to review their outputs with discipline will outperform their peers. The lesson for us: define the jobs that bog us down (code scaffolding, document prep, triage and routing, inspection prep) and let Grid Agents draft the first pass. We keep the last mile human, but we stop starting from zero.

The second signal is structural: compute and energy are converging. Even as models get leaner, demand for them rises faster. Over time, the cost of providing AI services converges to the cost of electricity. That puts utilities at the center of what’s possible. Our delivered price, our emissions profile, and our reliability are no longer just compliance metrics; they are competitive levers for an entire region’s AI economy. To say this another way, if we want California to remain at the center of worldwide innovation, we as a utility are now a part of that cycle.

A third signal reframes ambition: throw clusters at hard problems. Altman’s point is that the next wave of enterprise value will come from organizations willing to point serious, industrial‑strength compute at the few wicked problems that matter most, rather than sprinkling it over everything. In our house, that looks like wildfire ignition and consequence minimization, feeder‑level orchestration of PV, storage, and EV charging, accelerated undergrounding and hardening decisions, and faster planning. Not a science fair. A shortlist, and a team of upbeat yet serious people tasked with changing the company and the world.

The fourth signal is about moats. Many traditional moats erode when intelligence is cheap and abundant. What endures are unique operational data, real‑time integration with the physical world, and speed of adaptation. Those are local, lived advantages. They’re built by crews who know the territory, by systems wired into our grid, and by a culture that can take an idea from “science fiction” through “operationally sound” to “in production,” with safety and auditing in place.

The fifth signal acknowledges the social horizon: a deflationary arc and a need for guardrails. Productivity will jump in the 2030s, but distribution and access will matter. In practice, that means designing with communities, being transparent about model use in safety‑critical contexts, and keeping people in the loop where stakes are high.

Which leads to the sixth signal: human‑in‑the‑loop remains wise. People still respond to people in caring and high‑consequence contexts. Our design center is augmented crews, not automation everywhere. Agents draft; operators decide. Agents propose; field leaders approve. We move faster—and we stay trustworthy.

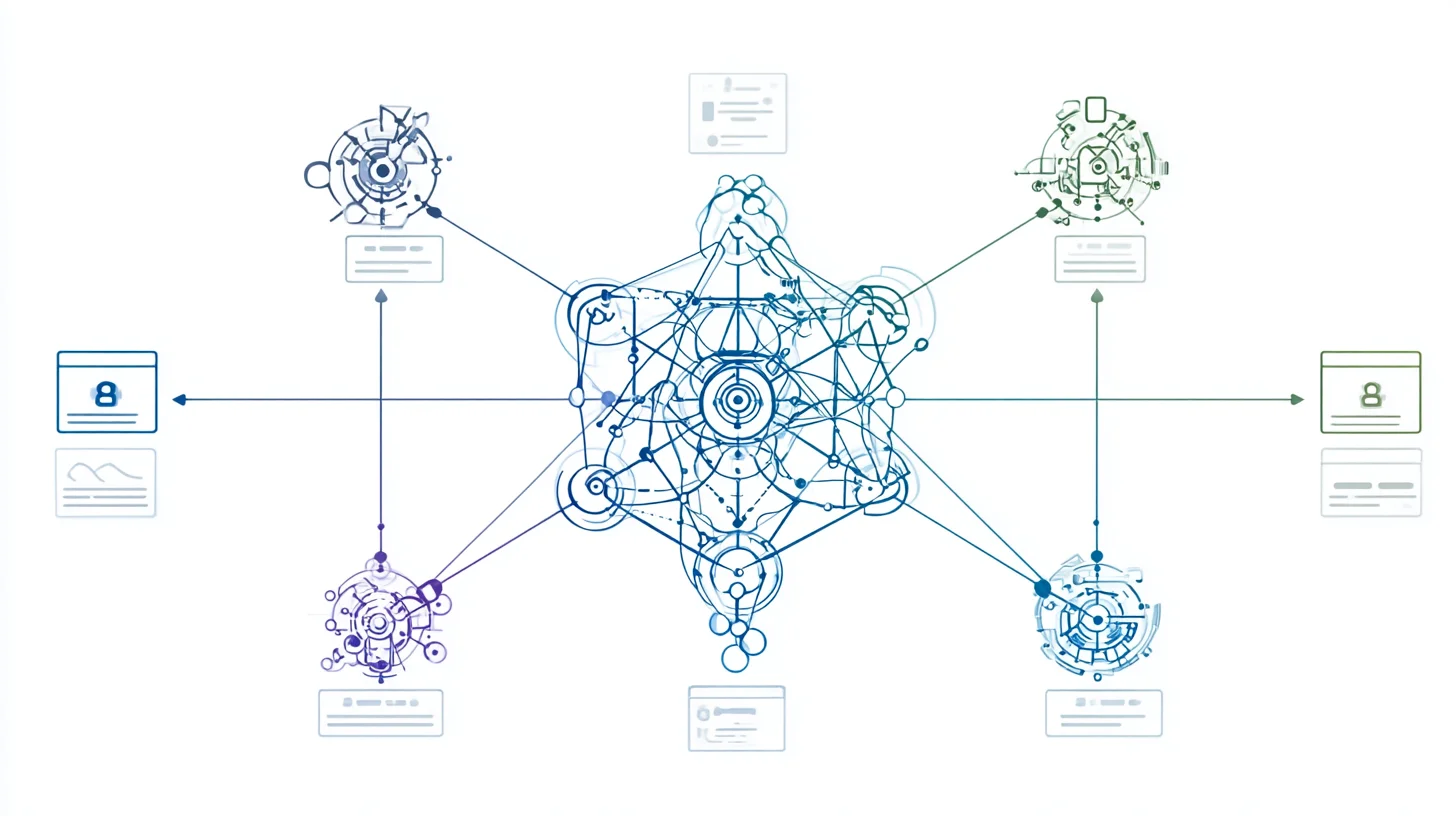

The AI↔Energy Flywheel

Picture the East‑LA Interchange: lanes of generation, load, markets, and safety all crossing at once. If we try to eyeball it at speed, we miss an exit and cause a pile‑up. If we instrument it—clear signage, live routing, ramp meters—we move more cars, safely. AI is the instrumentation. The grid is the concrete. Stewardship is driving with care.

Economically, as the cost of intelligence converges toward electricity, our price, carbon profile, and reliability directly shape the region’s attractiveness to data‑rich industries. That means the “soft” work of policy alignment and the “hard” work of steel in the ground both point to the same outcome: dependable, clean power that stays on.

Risk doesn’t vanish; it changes shape. Concentrated load changes contingency planning. Agents introduce model error, drift, and cyber attack surfaces we must monitor and test. Market volatility can amplify mistakes. Our answer is familiar: guardrails. We red‑team agents before they touch live systems. We bind them to scopes, log everything they do, and keep the big red kill‑switch within reach. We align with NERC CIP rigor and show our work in audits. Instrumentation enables confidence, not complacency.
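The three guardrails named above (scopes, logging, and a kill‑switch) can be pictured with a minimal sketch. Everything in it is an assumption made for illustration: the `GuardedAgent` class, the action names, and the log fields are hypothetical and do not describe any production system.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


class KillSwitchEngaged(Exception):
    """Raised when an action is requested after the kill switch is on."""


class OutOfScope(Exception):
    """Raised when an agent requests an action outside its allowed scope."""


@dataclass
class GuardedAgent:
    # All names here are hypothetical, chosen only for illustration.
    name: str
    allowed_actions: frozenset           # the agent's scope
    killed: bool = False                 # state of the kill switch
    audit_log: list = field(default_factory=list)

    def _log(self, action, outcome):
        # Log every request, whether it was allowed or blocked.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "agent": self.name,
            "action": action,
            "outcome": outcome,
        })

    def kill(self):
        # Engage the kill switch; all later requests are refused.
        self.killed = True
        self._log("kill_switch", "engaged")

    def request(self, action):
        # Check the kill switch first, then the scope, then allow.
        if self.killed:
            self._log(action, "blocked_kill_switch")
            raise KillSwitchEngaged(action)
        if action not in self.allowed_actions:
            self._log(action, "blocked_out_of_scope")
            raise OutOfScope(action)
        self._log(action, "allowed")
        return f"{self.name}: drafted '{action}' for human review"
```

In this sketch an allowed request only returns a draft for a person to review, which mirrors the "agents draft; operators decide" design center. A real deployment would also need tamper‑resistant logs and access control, which this example leaves out.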

The Workforce Story

This is a story about augmented employees, not replaced and abandoned employees. Dispatchers get a switching‑plan draft while they handle the weather. Troublemen receive work packages with material lists and permit notes already assembled. Vegetation teams see prioritized spans pulled from imagery and risk maps. Planners get cost‑and‑risk scenarios for undergrounding proposals at the click of a button. CX reps receive suggested responses and enrollment steps that turn a frustrated call into a useful conversation. People still make the decisions. The work gets lighter and faster.

To do this well, we name the new roles that make it real. An “Agent Wrangler” owns performance, safety cases, and KPIs; they are like managers, but for virtual employees. A Safety & Compliance Engineer encodes our requirements, standards, and limits into the agents so they don’t guess. An AI Reliability Engineer watches for drift, sets up observability, and rolls back cleanly when needed. A Data Steward manages quality contracts and lineage; Model Risk staff ensure we maintain governance and pass audits without drama. This is not bureaucracy. This is how we clear the highway of everything that can slow us down and keep speed and safety in the same lane.

Change management is where trust is earned. We treat agents like employees: we invest in them over time, grow their skills, assign meaningful work, and measure their performance. We start in a sandbox (read‑only), move to shadow mode, then to supervised execution. Agents that don’t beat baselines do not advance; we keep working with them until they do. We reward safe adoption and frontline feedback. And we use one simple, sturdy analogy to hold the line: unit tests are seatbelts (cheap, automatic, always buckled). No rollouts without them.
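The staged rollout described above (sandbox, then shadow mode, then supervised execution) can be sketched as a simple promotion gate. This is an illustration under stated assumptions: the `Stage` enum, the `next_stage` function, and the idea of a single numeric score compared against a baseline are all hypothetical simplifications.

```python
from enum import IntEnum


class Stage(IntEnum):
    # Hypothetical stage names taken from the rollout order in the text.
    SANDBOX = 0      # read-only
    SHADOW = 1       # runs beside people; outputs are compared, not acted on
    SUPERVISED = 2   # executes with human approval


def next_stage(stage, agent_score, baseline_score, tests_passed):
    """Return the stage an agent should be in after a review.

    Assumes a higher score is better. An agent advances one stage only
    if its unit tests pass and it strictly beats the baseline.
    """
    if not tests_passed:
        return stage             # no rollouts without unit tests
    if agent_score <= baseline_score:
        return stage             # has not beaten the baseline yet
    if stage is Stage.SUPERVISED:
        return stage             # already at the last stage in this sketch
    return Stage(stage + 1)
```

An agent that fails either check simply stays where it is, so the team can keep working with it. For example, `next_stage(Stage.SANDBOX, 0.9, 0.8, True)` returns `Stage.SHADOW`, while the same call with `tests_passed=False` returns `Stage.SANDBOX`.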

Moats That Matter in the Age of AGI

When intelligence is abundant, what endures? Unique operational data, safely governed and shaped into clean data products, remains a moat. Our telemetry, imagery, asset histories, and field notes are not generic. When curated and consented properly, they let our agents see what no one else can. Here the right picture is the aqueduct: a data pipeline with a clean source, a steady flow, measured loss, and shared access points. It’s ancient wisdom in modern form.

Real‑time grid integration is a second moat. Turning intelligence into action means touching devices, markets, and safety constraints in the moment, not after the fact. The teams who can bind agent recommendations to operational systems without cutting corners will feel the difference on windy nights and hot afternoons.

And then there is speed of adaptation, which is mostly culture. Advantage comes from the cycle time between idea, agent, and production, with safety cases and audit trails by default. That’s a rhythm we can teach and a habit we can reward.

Finally, community trust is the moat that makes the rest worth having. In wildfire country, trust is earned in the daylight: transparent communications, fair prioritization, consistent follow‑through. If the community believes we are both competent and caring, we will be granted the benefit of the doubt when we need it most.

Policy, Community, and Ethics

We aim for guardrails without gridlock. Over‑regulation can stall safety‑positive innovation; under‑regulation can harm people. The path between them is transparency and partnership. We align early and openly with our regulators, and we publish model cards, decision logs, and safety cases for agents that touch the grid. We invite third‑party validation where stakes are highest (wildfire mitigation, Public Safety Power Shutoff (PSPS) scope, restoration practices) so our confidence is shared, not assumed.

Partnership over posture means working with market and regulatory bodies on agent auditing, flexible‑compute tariffs, and DER orchestration standards. It also means plain language. When agents influence a PSPS decision or a vegetation priority, we can explain the “why” in English and Spanish, without jargon, with humility. We are not hiding a black box; we are showing our work.

Fairness remains a lived commitment, not a slogan. We test for bias in outage communications and vegetation prioritization. We make sure undergrounding and hardening choices do not disadvantage vulnerable communities. We publish accessible scorecards that show progress and areas to improve. And because protections fade, we re‑apply them like sunscreen—periodic red‑teaming, retraining, recalibration, and community feedback loops. Sun and wind return each season; so do our disciplines.