From Guesswork to Geometry: How We Measured the Mind

When my daughters were younger, I’d sit at the kitchen table while they did their homework. One would be sounding out words, the other trying to remember how fractions worked, each frustrated in her own way. I’d remind them, “You don’t have to know everything. You just have to keep getting better. Make stubbornness your superpower. If you keep at it and keep growing, you will get there, and in many cases you’ll grow beyond your friends and peers.”

Some nights they’d ask, “Dad, how come you are so smart?”

I never had a clean answer. Grades? I got horrible grades. Tests? I did poorly on tests. Curiosity? Yes. I was curious, and I made up my mind to keep asking, and answering, the right questions. Maybe that’s it: asking the right questions year after year, questions that reveal not just memory but understanding, and thus growth. Growth that wasn’t a straight line: jagged at first, then slowly more even.

That’s what this year’s story about Artificial Intelligence feels like—humanity at its own kitchen table, staring at the homework, finally making sense of those fractions.

The Year We Measured the Mind

Last year, when people spoke of AI, it felt like talking about the horizon—everyone could see it, but no one could measure the distance. We had metaphors and milestones, prophets and pundits. What we didn’t have was a ruler.

This year, the AGI Definition Project handed us one.

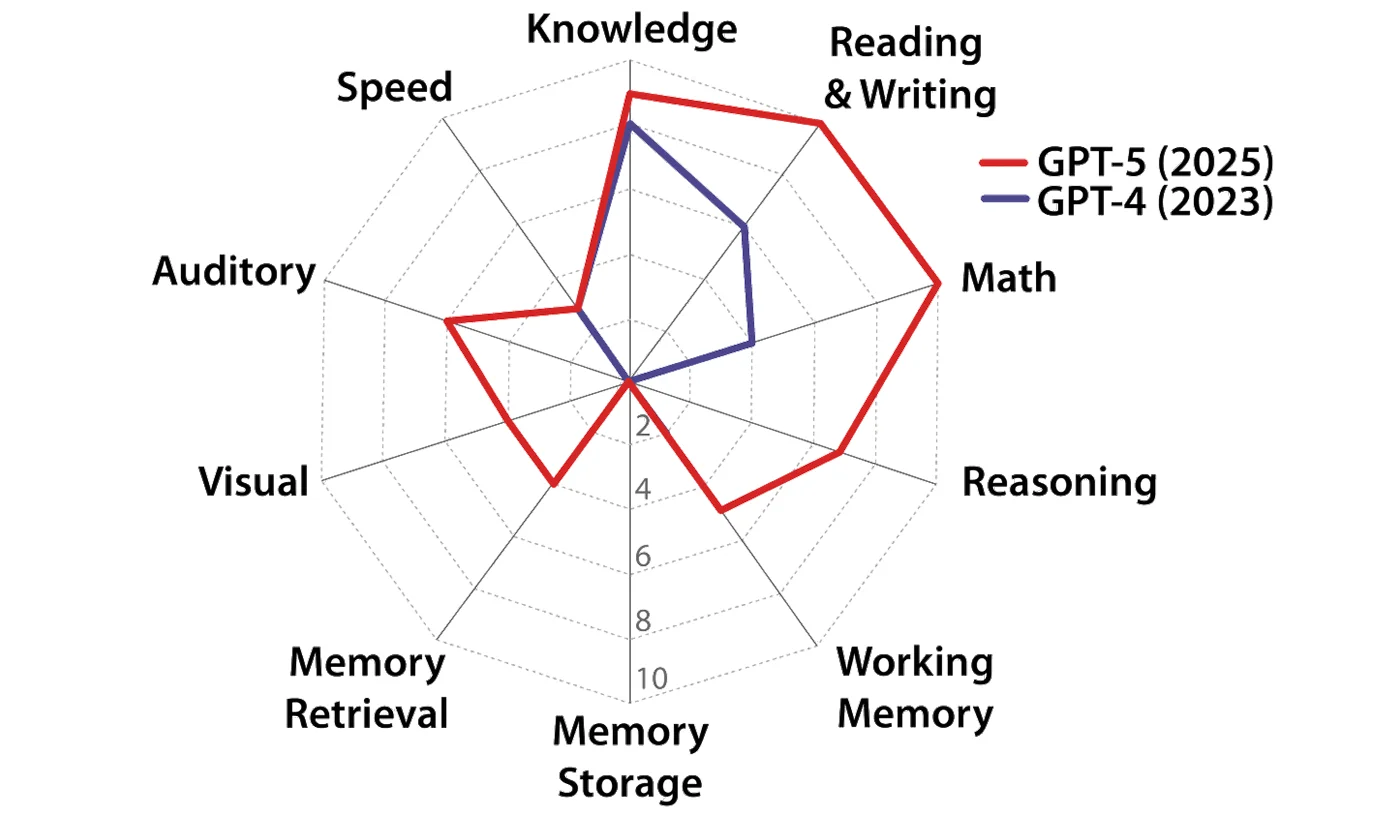

Researchers collaborated on a test grounded in the Cattell‑Horn‑Carroll theory of human cognition and mapped ten domains of general intelligence: reasoning, memory, perception, knowledge, language, math, and more. Each domain is scored not in vague metaphors, but in human terms.

Here’s the striking part: a score of 100% doesn’t mean perfection; it means parity with a well‑educated adult—someone holding a college degree in that domain.

If an AI ever earns 100% in mathematics, it wouldn’t just “do math well.” It would think mathematically as deeply and flexibly as a trained mathematician. If it reached 100% in long‑term memory, it would recall and connect experiences with the quiet grace of a human mind.

The ruler has been marked—not in zeros and ones, but in diplomas and lived experience.

What the Chart Shows

The attached radar chart tells a story we can see instead of merely sense. GPT‑4 sits closer to the center, its line tight and tentative, like a sketch of potential. GPT‑5, by contrast, stretches outward in bold red strokes—confident in some directions, fragile in others.

Its overall AGI score of 58%, up from GPT‑4’s 27%, shows undeniable progress. Still, the shape remains uneven. Today’s models shine in knowledge, reasoning, and language but falter in long‑term memory and associative recall, the quiet engines that let thought persist and mature.

It’s like a brilliant student who aces every test but can’t find their backpack or remember your name the next day. To be fair, we’ve all known people whose gifts come with gaps. We adapt. We help. We learn to work together.

Last year’s conversation was mostly about capability: Can it write code? Pass exams? Win debates? This year, the question matures; it’s about cognition across capabilities: Does it think the way we do? Does it generalize, adapt, and learn across contexts?

By grounding AI evaluation in psychometrics—the same tools used to study human intelligence—the AGI Definition Project shifts the narrative. Intelligence is no longer a magic trick. It’s a measurable spectrum that reveals not only how far AI has come, but how unevenly it’s grown.

In defining machine intelligence, we inevitably define ourselves. The framework doesn’t just ask how “smart” an AI is; it asks what kind of mind we consider educated.

So the shift from last year to this one is not just numerical—it’s philosophical. We’ve moved from wondering if machines can think to asking what thinking truly is.

Working with Uneven Intelligence

The next question is whether we’ll adapt to working with AI that is brilliant in some areas and weak in others—just as we do with people. How will we build systems and habits that let our strengths cover one another’s gaps? Will we demand human parity across all domains before we entrust important work, or will we invite AI out of the party‑trick phase and into collaboration?

The new skill is learning the “people skills” of working with AI. What is our AI IQ, and how can we raise it? Think of a zipper merge on the 405: sequence and patience matter. When humans and models take turns with clarity, everyone moves faster.

GPT‑5 may be halfway to human parity, but the line it draws—radiant, unfinished—reminds us that the story of intelligence, artificial or otherwise, is still unfolding. Like us, it’s a work in progress.

Epilogue: The Long Game of Growth

When I watch my kids study now, I still see those uneven lines of progress that mark every learning mind. A strength in language here, a gap in memory there. It’s rarely symmetrical, but it’s always alive.

Maybe that’s the real lesson of this year’s chart. Intelligence—human or machine—isn’t about being perfect in every category. It’s about growing toward the best version we’re called to be, filling valleys without flattening peaks.

Someday—perhaps within the next year or so—an AI will reach that 100% mark: college‑level mastery across the board. When it does, I hope it remembers the uneven years, the wonder of becoming. I hope it looks back with empathy—for itself, and for those of us still climbing the steep slopes of our own minds.

Because that, more than any score, is the most human quality of all.